What Is a Large Language Model and How Does It Work?

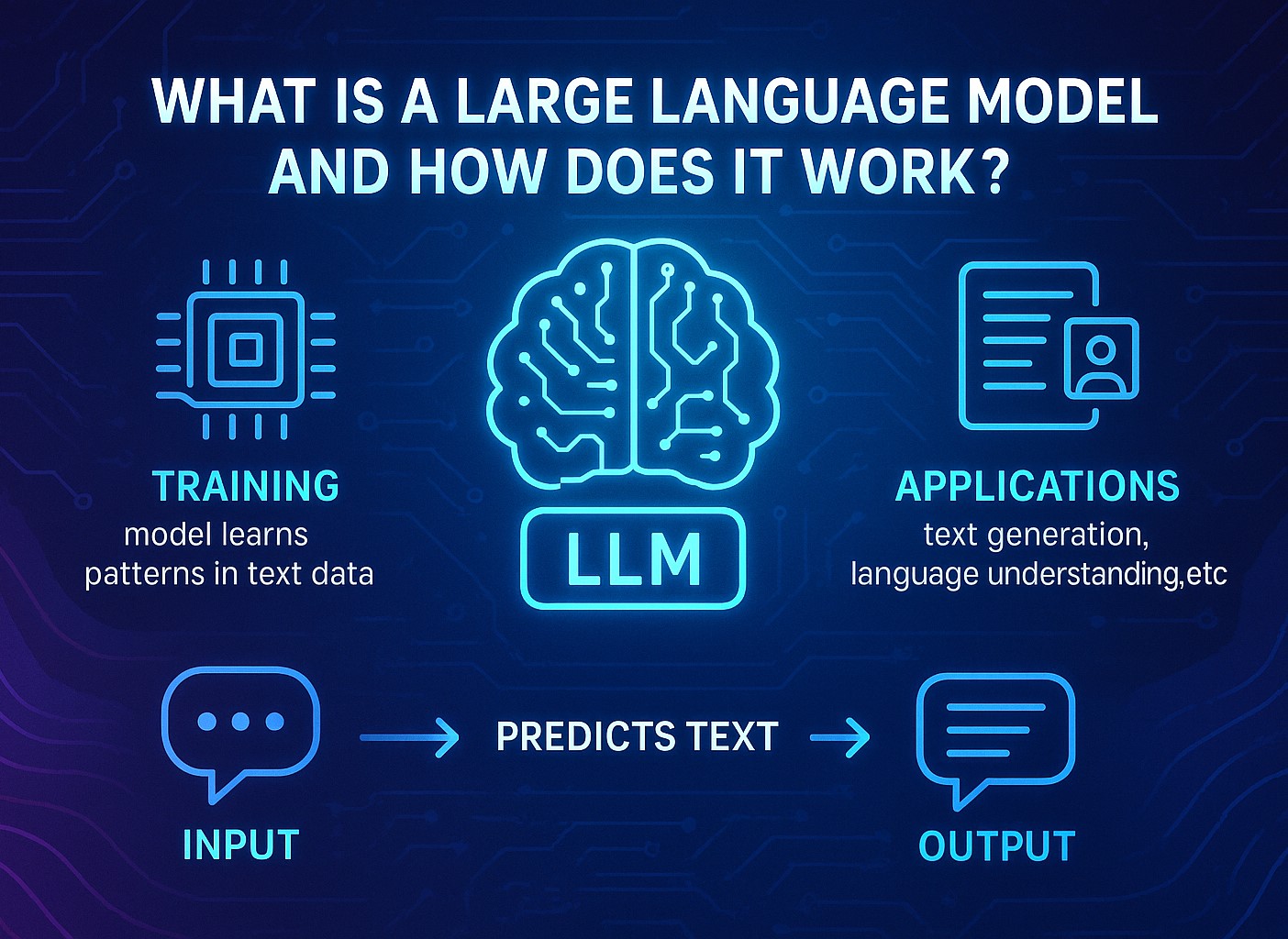

In today’s world of artificial intelligence (AI), large language models (LLMs) have become essential tools in natural language processing. These powerful systems can understand, generate, and interact using human language in ways that were once thought impossible. This article explores what a large language model is, how it works, and how it is shaping the future of AI applications.

What Is a Large Language Model?

A large language model is a type of AI system designed to understand and generate human language. It is trained on massive datasets containing text from books, articles, websites, and other written content. These models are called “large” because of the enormous number of parameters they use, often billions or even trillions.

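A parameter in this context is a numeric value (a weight) that the model adjusts during training and then uses to compute its predictions. In general, more parameters give a model more capacity to capture complex sentence structures, word meanings, and contextual cues, although size alone does not guarantee better results; the quality and quantity of the training data matter as well.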

Most modern LLMs are neural networks built on the transformer architecture. This design allows the model to pay attention to different parts of a sentence or paragraph, giving it a more comprehensive understanding of meaning and intent.
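To make “billions of parameters” concrete, here is a back-of-the-envelope count for a transformer. It assumes a standard GPT-style block: four d×d attention projections (query, key, value, output) plus a feed-forward layer that widens to 4d and back, which gives about 12·d² weights per layer. Biases, layer norms, and position embeddings are ignored, and the helper names are hypothetical, not part of any library.

```python
def block_params(d_model: int, ffn_mult: int = 4) -> int:
    """Approximate weights in one GPT-style transformer block (biases and layer norms ignored)."""
    attention = 4 * d_model * d_model                  # query, key, value and output projections
    feed_forward = 2 * d_model * (ffn_mult * d_model)  # expand to ffn_mult*d_model, then project back
    return attention + feed_forward


def approx_params(n_layers: int, d_model: int, vocab_size: int = 0) -> int:
    """Approximate total weights: stacked blocks plus an optional token-embedding table."""
    return n_layers * block_params(d_model) + vocab_size * d_model


# GPT-3's published configuration: 96 layers with a model width of 12,288.
gpt3 = approx_params(n_layers=96, d_model=12_288)
print(f"{gpt3:,}")  # 173,946,175,488
```

That lands close to the 175 billion parameters reported for GPT-3, which is why this rough 12 × layers × d² rule is handy for quick estimates.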

The Basics of How It Works

To grasp how a large language model functions, let’s break the process down into three key stages: training, fine-tuning, and inference.

1. Training Phase

The first stage involves feeding the model a large amount of text data. This phase is called pre-training. The goal is to teach the model the statistical patterns in language. For example, if the phrase is “The cat sat on the ___,” the model learns that “mat” is a likely word to come next.

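During training, the model doesn’t understand the meaning of words like a human does. Instead, it learns statistical relationships between words (more precisely, tokens, which are whole words or pieces of words) from the contexts in which they appear. At each step it assigns a probability to every possible next token given the text that came before, and its parameters are adjusted to make the correct next token more likely.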
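The next-word idea can be illustrated with a much older technique than an LLM: a counting-based n-gram model that estimates the probability of the next word from how often it followed the same two preceding words in a tiny, made-up corpus. An LLM learns these probabilities with a neural network that conditions on far longer context instead of counting, so treat this only as a sketch of the “probability and context” idea; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_ngram(corpus, n=3):
    """Count how often each word follows each (n-1)-word context."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - n + 1):
            context = tuple(words[i : i + n - 1])
            counts[context][words[i + n - 1]] += 1
    return counts


def next_word_probs(counts, context_words):
    """Turn the counts for one context into a probability distribution."""
    context = tuple(w.lower() for w in context_words)
    following = counts.get(context, Counter())
    total = sum(following.values())
    return {word: c / total for word, c in following.items()} if total else {}


corpus = [
    "The cat sat on the mat",
    "The dog sat on the mat",
    "The cat sat on the sofa",
]
model = train_ngram(corpus)
print(next_word_probs(model, ["on", "the"]))  # {'mat': 0.6666666666666666, 'sofa': 0.3333333333333333}
```

Because “mat” followed “on the” twice as often as “sofa”, it gets twice the probability, which is the same intuition as the “The cat sat on the ___” example.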

This phase requires high computational power and specialized hardware, such as graphics processing units (GPUs) or tensor processing units (TPUs), because training the largest models involves on the order of 10^23 or more arithmetic operations.
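A widely used rule of thumb makes the compute cost concrete: training a transformer takes roughly 6 floating-point operations (FLOPs) per parameter per training token, about 2 for the forward pass and 4 for the backward pass. The sketch below applies it to a hypothetical 7-billion-parameter model trained on 1 trillion tokens; the accelerator throughput (3×10^14 FLOP/s), cluster size, and 40% utilization are illustrative assumptions, not measurements of any real system.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


def training_days(total_flops: float, n_devices: int, peak_flops_per_device: float,
                  utilization: float = 0.4) -> float:
    """Wall-clock days, assuming devices sustain only a fraction of their peak throughput."""
    effective_flops_per_second = n_devices * peak_flops_per_device * utilization
    return total_flops / effective_flops_per_second / 86_400  # 86,400 seconds per day


flops = training_flops(n_params=7e9, n_tokens=1e12)                        # hypothetical model and dataset
days = training_days(flops, n_devices=1_000, peak_flops_per_device=3e14)  # hypothetical cluster
print(f"{flops:.1e} FLOPs, about {days:.1f} days")  # 4.2e+22 FLOPs, about 4.1 days
```

Under these assumptions a single device would need over a decade for the same run, which is why pre-training is spread across large clusters of GPUs or TPUs.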

2. Fine-Tuning Phase

After the base model is trained, it may go through a fine-tuning phase. This involves training the model on more specific datasets to improve its performance for certain tasks. For example, it can be fine-tuned to perform well in legal writing, medical questions, or customer service interactions.

Fine-tuning can make the model more accurate for a target task, help reduce unwanted biases, and adapt it to particular use cases. This step often includes supervised learning, where human-labeled examples show the model what a correct response looks like.
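The pre-train-then-fine-tune pattern can be sketched with a toy model. Its only parameters are a table of next-word scores (logits), trained by gradient descent on cross-entropy loss; it is first trained on general text and then trained further on a small, made-up medical text, which shifts its predictions toward the new domain. Real LLM fine-tuning updates billions of transformer weights and usually relies on curated, often human-labeled examples, so the class name, data, and learning rate here are hypothetical and purely illustrative.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyBigramModel:
    """Toy next-word model: its only parameters are one row of logits per previous word."""

    def __init__(self, vocab):
        self.words = sorted(vocab)
        self.index = {w: i for i, w in enumerate(self.words)}
        size = len(self.words)
        self.logits = [[0.0] * size for _ in range(size)]

    def predict(self, prev):
        """Return the most probable next word after `prev`."""
        probs = softmax(self.logits[self.index[prev]])
        return self.words[probs.index(max(probs))]

    def train(self, text, epochs, lr=0.5):
        """Stochastic gradient descent on next-word cross-entropy, one word pair at a time."""
        words = text.split()
        for _ in range(epochs):
            for prev, nxt in zip(words, words[1:]):
                row = self.logits[self.index[prev]]
                probs = softmax(row)
                target = self.index[nxt]
                # The gradient of cross-entropy w.r.t. the logits is probs - one_hot(target).
                for j, p in enumerate(probs):
                    row[j] -= lr * (p - (1.0 if j == target else 0.0))


general = "the cat sat on the mat . the cat ran ."
medical = "the patient has a fever . the patient needs rest ."

model = TinyBigramModel(set(general.split()) | set(medical.split()))
model.train(general, epochs=50)  # "pre-training" on general text
print(model.predict("the"))      # cat
model.train(medical, epochs=30)  # "fine-tuning" on a small domain dataset
print(model.predict("the"))      # patient
```

The same parameters are used in both stages: fine-tuning simply continues training from where pre-training stopped, which is far cheaper than training a new model from scratch.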

3. Inference Phase

Once the model is trained and fine-tuned, it is ready for use. This is known as inference. During this stage, users interact with the model by entering text (a prompt), and the model generates a response one token at a time, repeatedly predicting a likely next token based on the prompt and the text it has produced so far.

For instance, if a user types, “Write a short poem about the ocean,” the model uses what it has learned to generate a poem that matches the theme and structure of typical ocean-related poems found in its training data.
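Generation at inference time can be sketched as a loop: score the possible next words, turn the scores into probabilities with a softmax, pick one, append it, and repeat until an end marker appears. The score table below is a hypothetical stand-in for a trained model; a real LLM computes fresh scores from the whole prompt and everything generated so far, not just the last word. The `temperature` parameter is a common sampling knob: in this sketch, 0 means always take the highest-scoring word (greedy decoding), and higher values flatten the distribution so the output varies more.

```python
import math
import random

# Hypothetical next-word scores (logits) standing in for a trained model.
NEXT_WORD_LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"ocean": 2.5, "wave": 1.5, "sky": 0.5},
    "a": {"wave": 2.0, "ocean": 1.0},
    "ocean": {"sings": 2.0, "rests": 1.0, "<end>": 0.5},
    "wave": {"breaks": 2.0, "<end>": 1.0},
    "sky": {"<end>": 1.0},
    "sings": {"<end>": 2.0},
    "rests": {"<end>": 2.0},
    "breaks": {"<end>": 2.0},
}


def softmax(logits, temperature=1.0):
    """Convert a {word: score} dict into {word: probability}, scaled by temperature."""
    scaled = {w: s / temperature for w, s in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - m) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def generate(temperature=0.0, max_words=10, rng=None):
    """Autoregressive loop: each chosen word becomes the context for the next step."""
    rng = rng or random.Random(0)
    word, output = "<start>", []
    for _ in range(max_words):
        logits = NEXT_WORD_LOGITS[word]
        if temperature == 0.0:
            word = max(logits, key=logits.get)  # greedy: take the top-scoring word
        else:
            probs = softmax(logits, temperature)
            word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)


print(generate(temperature=0.0))  # the ocean sings
print(generate(temperature=1.0))  # varies with the random draws
```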

The Role of Transformers in LLMs

The core technology behind most LLMs today is the transformer architecture. Introduced in the 2017 research paper “Attention Is All You Need” by researchers at Google, transformers changed how AI processes sequences of data.

Transformers use a mechanism called “attention,” which allows the model to focus on important words in a sentence, even if they’re far apart. For example, in the sentence “The book that John borrowed last week was fascinating,” the transformer architecture helps the model understand that “book” is the subject of “was fascinating,” even though other words are in between.
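The attention step at the heart of a transformer is usually written as scaled dot-product attention, softmax(Q·K^T / √d)·V: each word’s query vector is compared with every word’s key vector, the scores become weights, and the output is a weighted mix of the value vectors. The pure-Python sketch below uses tiny hand-picked 2-dimensional vectors for a few words of the example sentence. In a real model these vectors are produced by learned weight matrices and have hundreds or thousands of dimensions, so every number here is hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[j] for w, value in zip(weights, values)) for j in range(len(values[0]))]
    return weights, output


words = ["book", "John", "borrowed", "was"]
# Hypothetical key vectors: the first dimension loosely marks "candidate subject noun".
keys = [[3.0, 0.0], [1.0, 3.0], [0.0, 1.0], [0.0, 0.0]]
# Hypothetical value vectors carrying each word's information.
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.2, 0.8]]
query_for_was = [3.0, 0.0]  # hypothetical: "was" looks for its subject

weights, output = attend(query_for_was, keys, values)
for word, w in zip(words, weights):
    print(f"{word:>9}: {w:.2f}")
```

With these made-up vectors, “book” receives almost all of the attention weight (about 0.98) when the model processes “was”, even though other words sit between them.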

This architecture enables LLMs to handle longer texts, maintain context, and understand nuances in language more effectively than earlier models such as recurrent neural networks (RNNs), which processed text one word at a time.

Common Applications of Large Language Models

LLMs are used in a wide range of industries and applications. Some of the most common uses include:

1. Text Generation

LLMs can generate new content such as articles, reports, stories, and emails. This feature is especially useful in content creation, journalism, and creative writing.

2. Language Translation

By understanding the structure of multiple languages, LLMs can translate text from one language to another with high accuracy, improving global communication and access to information.

3. Sentiment Analysis

Businesses use LLMs to analyze customer feedback, reviews, or social media posts to determine public sentiment and respond effectively.

4. Question Answering

LLMs can provide answers to factual or conversational questions, offering a more natural and interactive user experience in customer service, education, and research.

5. Code Generation

Programmers use LLMs to write or complete code snippets in various programming languages, which can increase productivity, although generated code still needs to be reviewed and tested.

Benefits of Using Large Language Models

There are several advantages to using LLMs:

- Scalability: Once trained, an LLM can serve millions of users at the same time.
- Speed: These models can generate high-quality responses within seconds.
- Adaptability: They can be fine-tuned for specific industries or tasks.
- Cost Efficiency: Over time, LLMs can reduce the need for human labor in repetitive language tasks.

Limitations and Ethical Considerations

Despite their benefits, LLMs are not without challenges:

- Bias in Training Data: Since LLMs learn from large amounts of public text, they can reflect societal biases found in that data.
- Misinformation: The models may generate content that sounds plausible but is factually incorrect.
- Data Privacy and Copyright: There are concerns over the use of copyrighted or sensitive personal material during training.
- Energy Consumption: Training large models requires a significant amount of energy, raising environmental concerns.

To address these issues, researchers and developers are working on improving model transparency, developing safer training practices, and implementing ethical guidelines.

The Future of Large Language Models

The evolution of LLMs is ongoing. Future models are expected to be:

- More Efficient: Reducing the size and power consumption while maintaining performance.
- Multimodal: Integrating text, images, audio, and video for richer interactions.
- Customizable: Allowing users or businesses to create personalized models.
- Collaborative: Supporting workflows where humans and AI co-create solutions in real time.

As the field grows, LLMs will continue to expand their impact across education, healthcare, law, science, and entertainment.

Conclusion

Large language models are transforming how we interact with machines. By learning patterns in vast amounts of text, they can understand, generate, and translate language with impressive fluency. From writing code to composing poetry, LLMs are opening new doors in productivity and creativity.

Understanding how these models work helps users apply them responsibly and efficiently. As AI continues to evolve, large language models will play a key role in shaping the future of technology and human communication.

Key Takeaways

- LLMs are AI models trained on vast text datasets.
- They use transformer architecture to understand and generate human-like language.
- Common applications include translation, content creation, and customer support.
- While powerful, LLMs require ethical use due to potential biases and environmental impact.
- The future of LLMs points toward more personalized, multimodal, and efficient systems.

Reference

https://en.wikipedia.org/wiki/Large_language_model

Link License – https://en.wikipedia.org/wiki/Wikipedia:Text_of_the_Creative_Commons_Attribution-ShareAlike_4.0_International_License

Dear friends, I am delighted to invite you to visit the link below to discover more tech articles. Thanks for your support.

https://techsavvo.com/category/blog/